SlideSpecs

Automatic and Interactive Presentation Feedback Collation

ACM Conference on Intelligent User Interfaces — IUI 2023

Presenters often collect audience feedback through practice talks to refine their presentations. In formative interviews, we find that although text feedback and verbal discussions allow presenters to receive feedback, organizing that feedback into actionable presentation revisions remains challenging. Feedback can be redundant, lack context, or be spread across various emails, notes, and conversations. To collate and contextualize both text and verbal feedback, we present SlideSpecs. SlideSpecs lets audience members provide text feedback (e.g., ‘font too small’) along with an automatically detected context, including relevant slides (e.g., Slide 7) or content tags (e.g., ‘slide design’). In addition, SlideSpecs records and transcribes the spoken group discussion that commonly occurs after practice talks and facilitates linking text critiques to their relevant discussion segments. Finally, SlideSpecs lets the presenter review all text and spoken feedback in a single context-rich interface (e.g., relevant slides, topics, and follow-up discussions). We demonstrate the effectiveness of SlideSpecs by applying it to a range of eight presentations across computer vision, programming notebooks, sensemaking, and more. When surveyed, 85% of presenters and audience members reported they would use the tool again. Presenters reported that using SlideSpecs improved feedback organization, provided valuable context, and reduced redundancy.

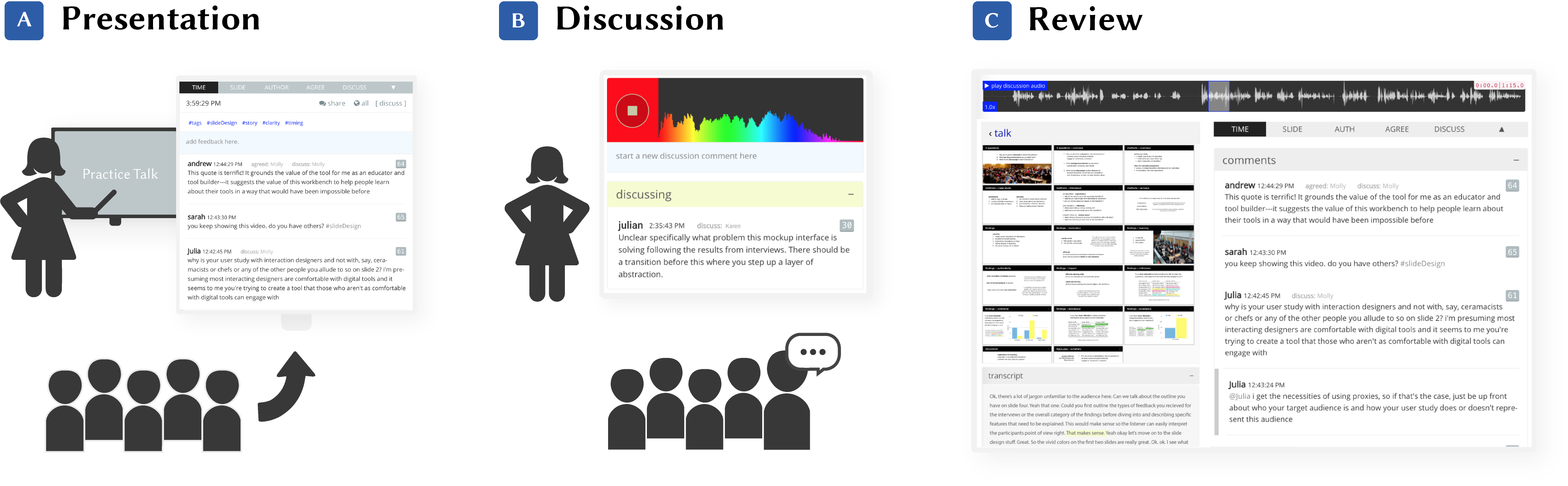

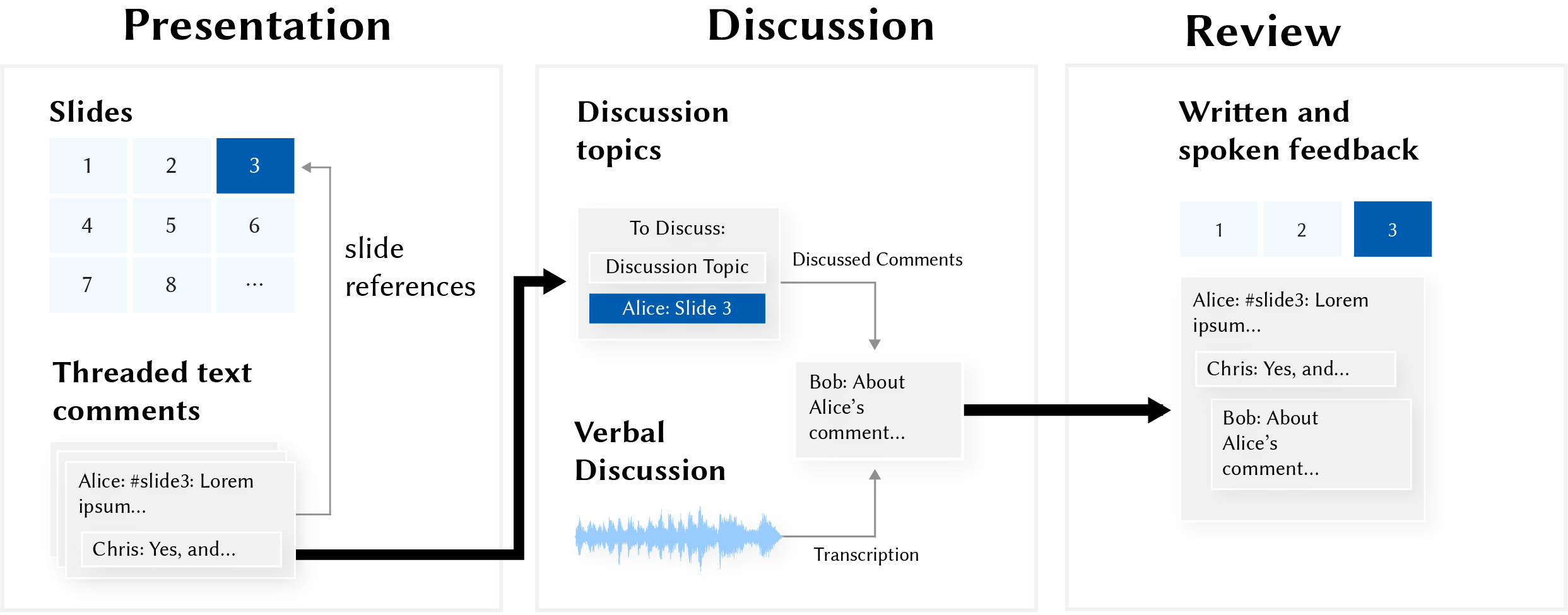

SlideSpecs supports three phases (presentation, discussion, and review) and three distinct participant roles (presenter, audience, and facilitator). The presenter delivers their practice presentation while audience members and the facilitator share comments using the Presentation Interface (A). During the discussion (B), the presenter and audience discuss feedback while the facilitator annotates the discussion. The presenter later reviews all feedback at their leisure in the Review Interface (C).

During the presentation, audience members write critiques and respond to existing critiques. Audience members may also include context with their text critiques (e.g., specific slides, tags for feedback type). The threaded text comments flagged for discussion appear in the Discussion interface. During the discussion, the facilitator marks which topics are being discussed, matching existing text critiques where possible. SlideSpecs records and transcribes the discussion audio, allowing the presenter to jointly review the collated text and verbal feedback.

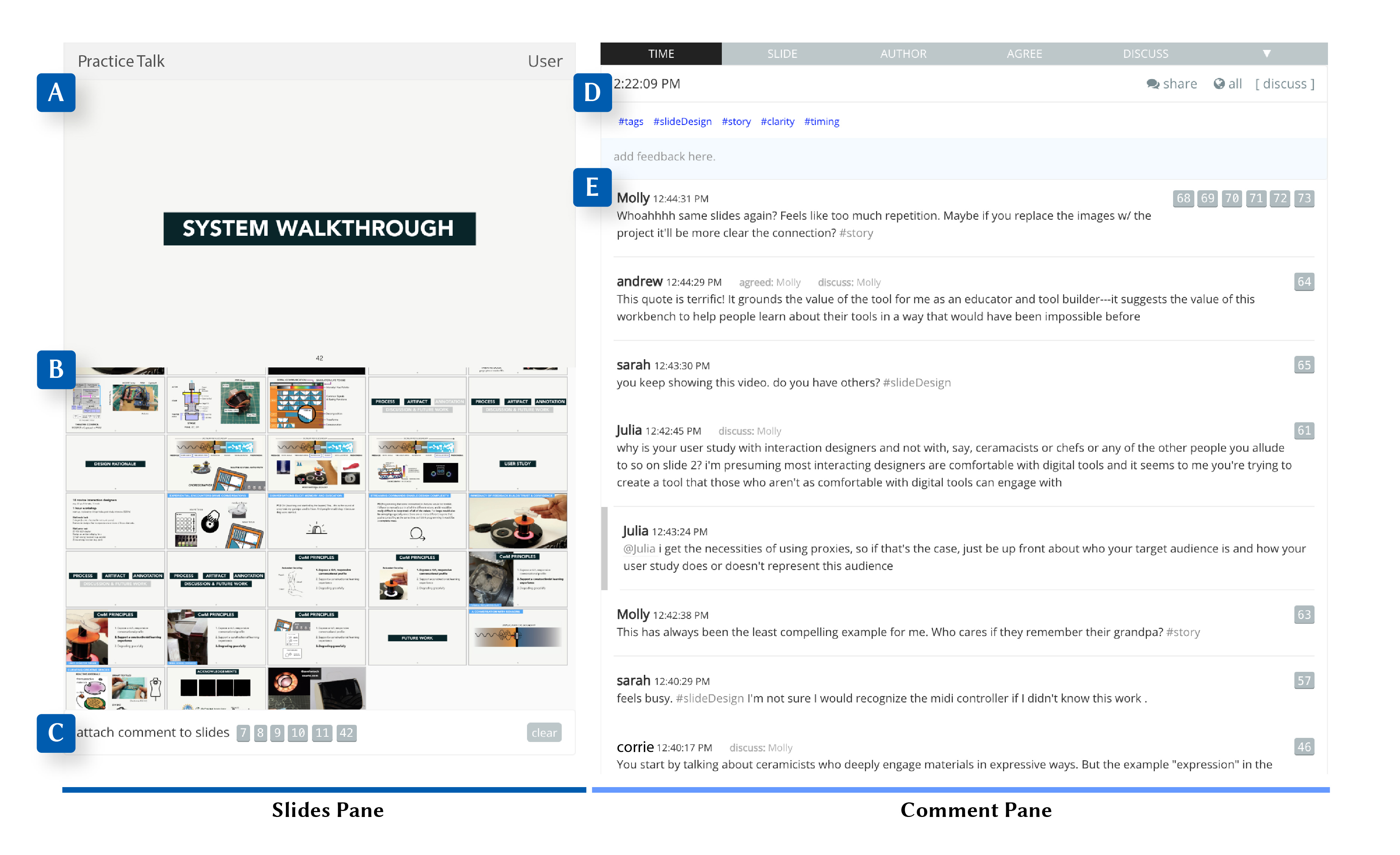

The Feedback interface. In the Slides Pane, hovering over the smaller thumbnails (B) updates the detailed view of the current slide (A), allowing users to easily scan and inspect talk slides. Audience members can select thumbnails to attach comments to designated presentation slides (C). In the Comments Pane, the interface features different ways of sorting the comments (D), a list of tags used in the comments, and a text area for entering feedback. Last, the interface displays a live-updating list of the audience’s comments (E), which includes comment metadata (i.e., comment author, creation time, and slides referenced).
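To make the comment metadata described above concrete, here is a minimal Python sketch of how a comment with its author, creation time, referenced slides, and tags might be represented and sorted. This page does not describe how SlideSpecs detects context or which sort orders it offers, so everything here is an assumption for illustration: the `Comment` class, the `#tag` and "slide N" text patterns, and the three sort keys are hypothetical and are not the SlideSpecs implementation.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Hypothetical patterns: the page does not say how context is detected.
SLIDE_RE = re.compile(r"\bslides?\s+(\d+)", re.IGNORECASE)  # e.g. "slide 7"
TAG_RE = re.compile(r"#([\w-]+)")                           # e.g. "#slide-design"


@dataclass
class Comment:
    """One text critique plus the metadata listed in the caption."""
    author: str
    text: str
    created: float  # seconds since the talk started (assumed unit)
    slides: List[int] = field(default_factory=list)
    tags: List[str] = field(default_factory=list)


def make_comment(author: str, text: str, created: float) -> Comment:
    """Build a comment, pulling slide numbers and tags out of its text."""
    slides = sorted({int(n) for n in SLIDE_RE.findall(text)})
    tags = sorted({t.lower() for t in TAG_RE.findall(text)})
    return Comment(author, text, created, slides, tags)


def sort_comments(comments: List[Comment], key: str = "time") -> List[Comment]:
    """Return a new list ordered by an assumed sort key."""
    if key == "time":
        return sorted(comments, key=lambda c: c.created)
    if key == "author":
        return sorted(comments, key=lambda c: (c.author.lower(), c.created))
    if key == "slide":
        # Comments with no slide reference sort after those that have one.
        return sorted(comments, key=lambda c: (not c.slides, c.slides[:1], c.created))
    raise ValueError(f"unknown sort key: {key}")


if __name__ == "__main__":
    comments = [
        make_comment("Ana", "Font too small on slide 7 #slide-design", 12.0),
        make_comment("Ben", "Speak a little slower #delivery", 5.0),
    ]
    for c in sort_comments(comments, key="slide"):
        print(c.author, c.slides, c.tags)
```

Keeping the extracted slides and tags on the comment itself means any sort or filter can run over one flat list, which is one plausible way to support a single live-updating comment view.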

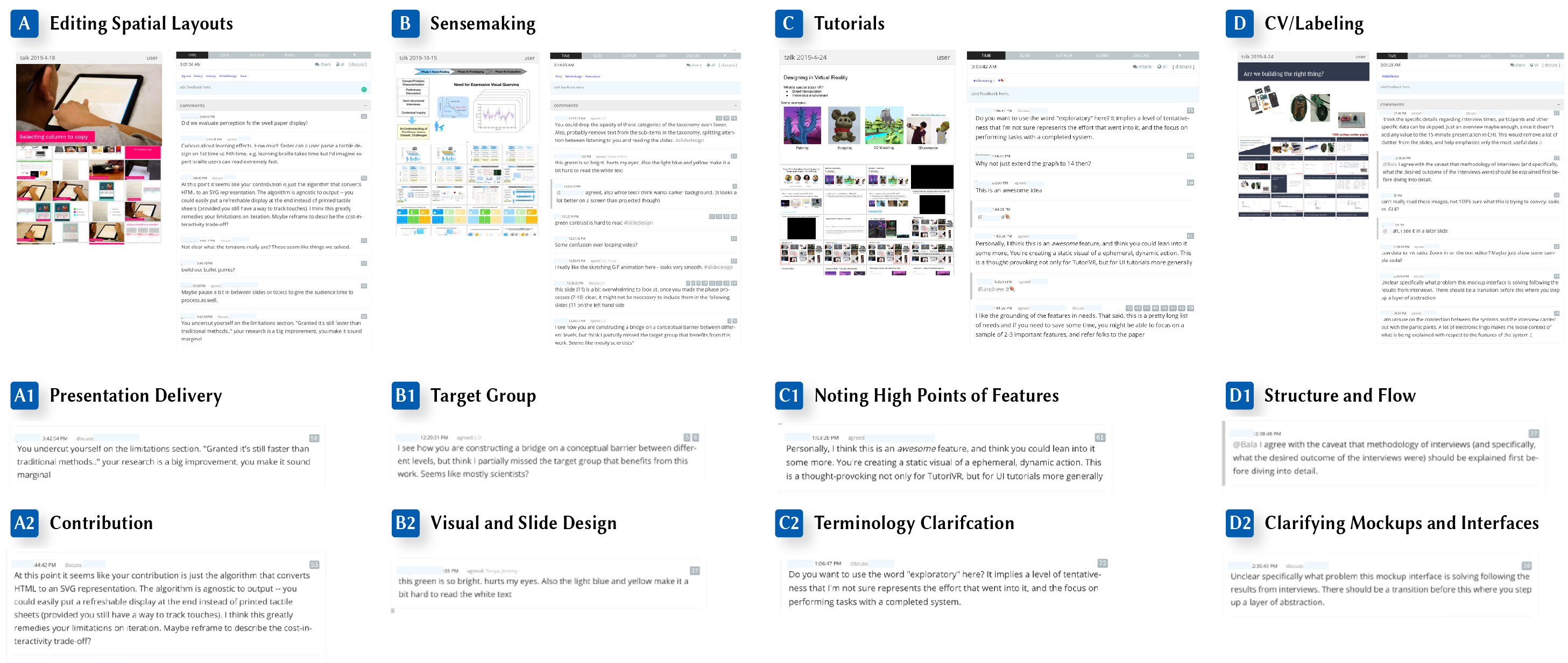

Results. Presenters used SlideSpecs for a wide variety of practice talks (A-D). They received feedback concerning many aspects of their presentation, from slide design and delivery to requests for clarification. We highlight select feedback from four different practice presentations. These comments showcase topics that the audience’s feedback addressed; eight comments (A1-D2) are shown in detail alongside a rough characterization of what part of the presentation each comment addresses. More details can be found in our full paper (PDF).
